Image credit: Pixabay

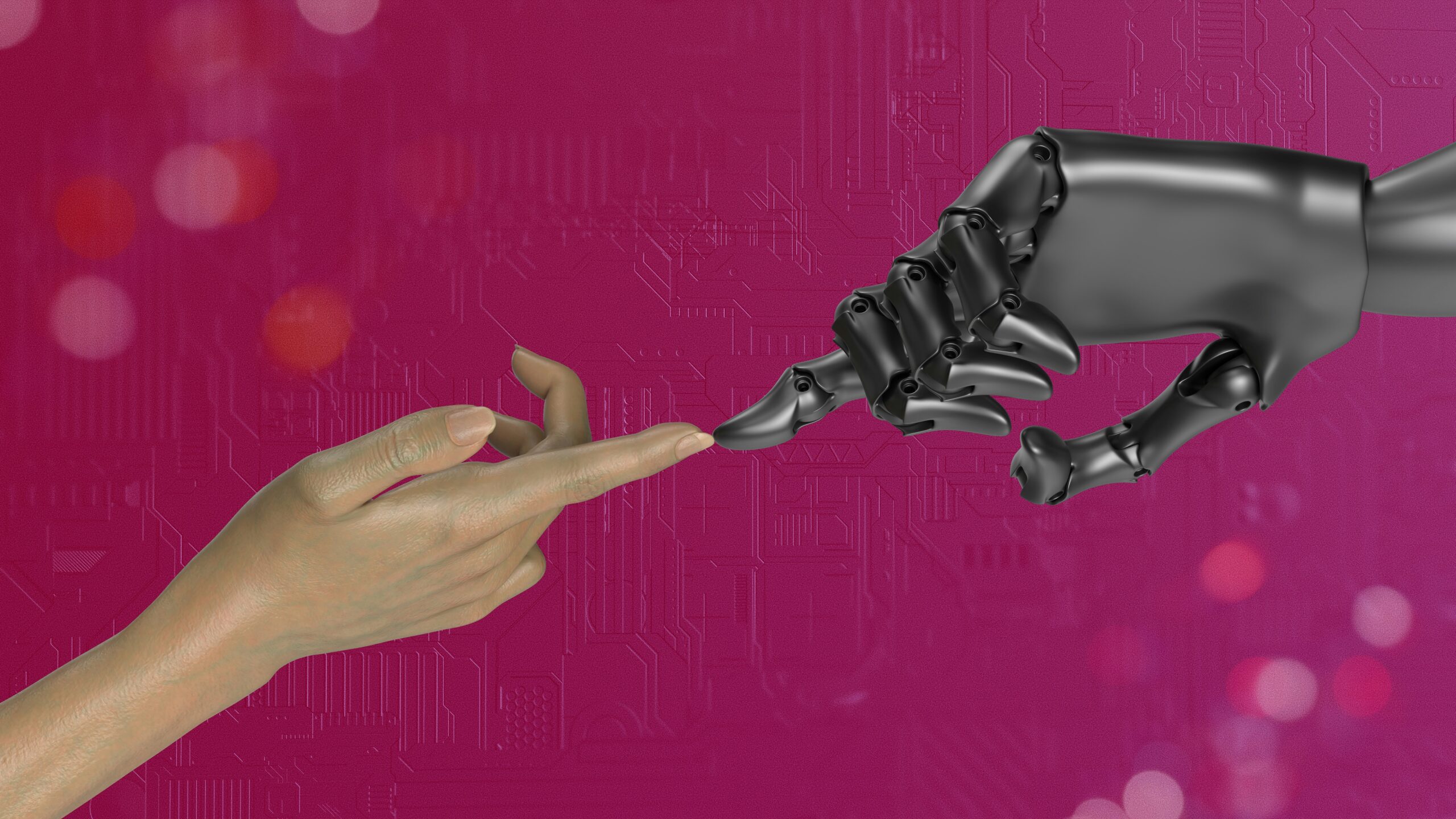

Artificial intelligence is no longer limited to streamlining business operations; it now shapes daily workflows in organizations across industries. As companies increasingly use AI tools for day-to-day tasks such as analytics and communication, AI literacy is becoming essential for their teams. The ability to understand, evaluate, and effectively use AI systems is fast becoming a sought-after professional skill.

During the 1990s, proficiency in Excel became non-negotiable in most workplaces. AI literacy is now emerging as the modern equivalent of that digital fluency. Employees across industries are already engaging with AI systems, often without any formal training. This informal adoption shows both the promise and the peril of a rapidly evolving technology that few people are taught to use well.

Why Does AI Literacy Matter Now?

The widespread integration of large language models (LLMs) such as ChatGPT has transformed the way teams communicate, brainstorm, and execute tasks. These tools streamline operations and enhance productivity, but their use comes with new risks. Without a clear understanding of how the tools work, or guardrails on how they are used, untrained employees often expose sensitive data, including personally identifiable information (PII), protected health information (PHI), and proprietary company data.

The gap between the adoption of AI tools and the knowledge needed to use them is widening. As these tools have made their way into everyday workflows, a few organizations have already built structured learning programs that explain how the tools work so their teams can use the technology responsibly. Programs like these show how AI literacy can help resolve the growing tension between innovation and risk management.

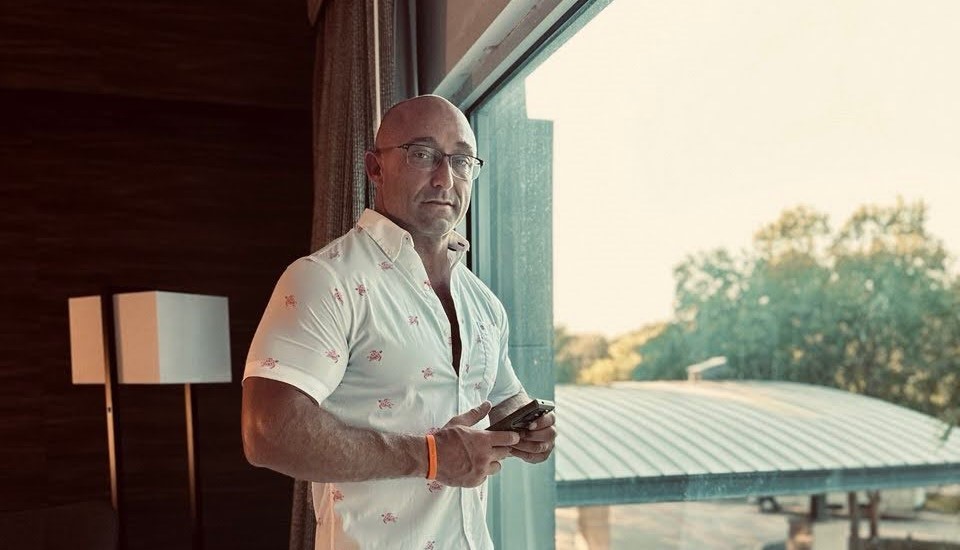

Roberto Planos, Director of AI and Risk at Framework Security, notes that organizations often underestimate how deeply AI has already entered daily workflows. “The cat is out of the bag,” he said. “People will be using it regardless, and for companies to think otherwise is a little bit naïve.” He also points out that while LLMs feel new to the public, AI is not a new discipline. “Its roots are in the 1940s. Large companies have been running machine learning models for decades.”

The Skills Gap: A Quiet Risk

Executives broadly recognize AI’s potential but often lack a roadmap for building literacy within their teams. This absence of strategy creates a quiet but significant risk. True AI literacy extends beyond knowing how to generate text or analyze data. It includes understanding how prompts influence outputs.

Planos defines AI literacy as both practical and conceptual. “Prompt writing is important, but employees should also know what parameters they can set, like adjusting temperature or how to assign a model a business-specific role.” Beyond prompts, he recommends a basic understanding of machine learning and foundation models. Since every industry uses AI differently, he says leaders must define what “baseline literacy” means for their own organization.
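The temperature parameter mentioned above can be made concrete with a small sketch. The Python below assumes the common textbook formulation in which temperature divides a model’s raw scores (logits) before a softmax turns them into probabilities; the logits are made-up numbers. The `messages` list shows the role/content layout that many chat-style LLM interfaces use to assign a system role, but it is illustrative only and not tied to any particular vendor’s API.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw model scores (logits) into next-token probabilities.

    Lower temperature sharpens the distribution toward the top-scoring
    token; higher temperature flattens it, making output more varied.
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores for three candidate next tokens.
logits = [2.0, 1.0, 0.5]
for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])

# A business-specific role is commonly set with a "system" message.
# This structure is illustrative, not a specific vendor's API.
messages = [
    {"role": "system",
     "content": "You are a compliance assistant for an insurance firm. "
                "Never repeat customer identifiers."},
    {"role": "user",
     "content": "Summarize this claims policy in plain language."},
]
```

Running the loop shows the top token’s probability climbing above 0.99 at temperature 0.2 and falling below 0.5 at 2.0, which is why lower temperatures are generally suggested for repeatable, factual tasks and higher ones for brainstorming.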

Equally vital is a working grasp of machine learning and generative model concepts, which helps employees discern when to trust AI outputs and when to apply human oversight. In an era where decisions are increasingly data-driven, ignorance is no longer benign; it can compromise both operational integrity and organizational security.

Navigating the Risks

Framework Security helps companies adopt safe AI practices through comprehensive risk assessments and governance recommendations. Its approach aligns with ISO/IEC 42001, the world’s first international standard for AI management systems, which emphasizes scalability and continual improvement.

According to Planos, ISO/IEC 42001 stood out because it is “use-case agnostic and scalable regardless of an organization’s size.” In nearly every assessment Framework conducts, he sees the same pattern: “a lack of pinpointed, AI-specific training.” He recommends that companies fold short modules on prompt hygiene and LLM expectations into existing InfoSec programs so that governance keeps pace with innovation rather than trailing behind it.

“I think that, including a baseline skill set around, at minimum, the use of large language models… would push organizations further or faster,” said Planos. His insight reflects a growing consensus that AI competence should be embedded in organizational culture, not treated as a niche technical capability.

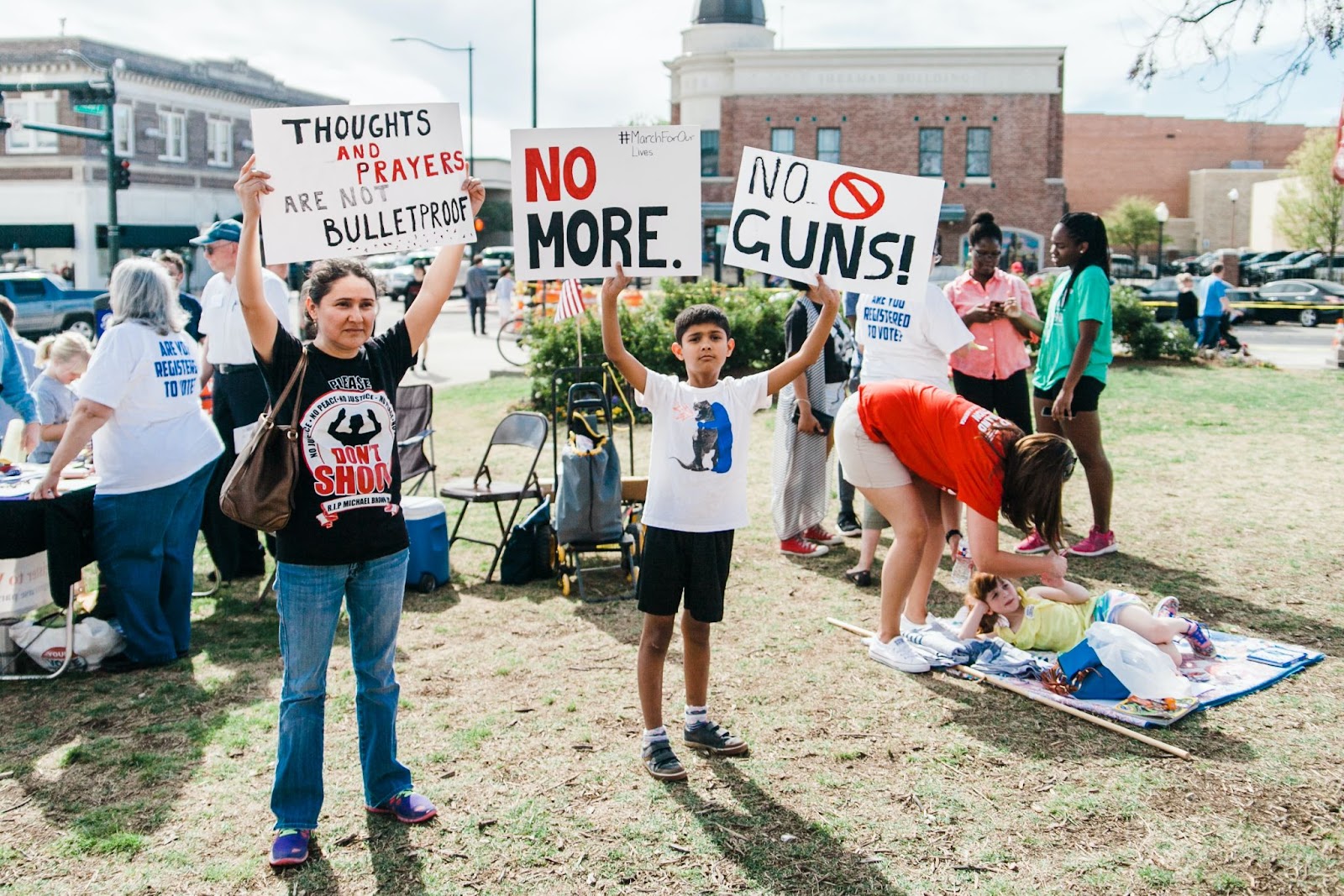

Planos emphasizes that the most urgent risk is not model misuse but data loss. “The biggest risk is data leakage,” he said, citing PHI, PII, and confidential business information as the most vulnerable. He recommends implementing administrative safeguards so that “anything that has the shape of personal information is automatically redacted before outputs reach users.”
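The redaction safeguard described above can be sketched as a pattern-matching filter applied to model output before it reaches a user. The Python below is a minimal illustration under stated assumptions: the three regular expressions (email address, US Social Security number, US-style phone number) and the `redact` helper are hypothetical examples, not Framework Security’s implementation.

```python
import re

# Hypothetical patterns for a few common "shapes" of personal information.
# Real deployments need far broader coverage (names, addresses, medical
# record numbers) and usually a dedicated PII-detection service.
PII_PATTERNS = {
    "EMAIL": re.compile(r"\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def redact(text: str) -> str:
    """Replace every match of a PII pattern with a labeled placeholder."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[REDACTED {label}]", text)
    return text


model_output = "Contact Jane at jane.doe@example.com or 555-867-5309. SSN: 123-45-6789."
print(redact(model_output))
# → Contact Jane at [REDACTED EMAIL] or [REDACTED PHONE]. SSN: [REDACTED SSN].
```

Note that the name “Jane” passes through untouched: simple pattern matching only catches information with a predictable format, which is one reason production systems typically pair rules like these with dedicated PII-detection tooling.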

Best Practices for Responsible AI Adoption

Forward-thinking companies have started including AI literacy in their core training programs. Weaving AI education into onboarding and information security (InfoSec) programs, developing dedicated AI acceptable use policies, and creating controlled internal AI environments are emerging as some of the most effective ways to build AI literacy.

These measures not only mitigate the risks of an evolving technology but also empower teams to use AI confidently and responsibly. Some organizations are going further, exploring in-house AI agents and private LLM deployments.

Planos says the most effective programs treat AI training as an extension of the company’s security culture. He suggests adding short AI modules into onboarding, integrating LLM guidance into existing InfoSec training, and ensuring teams have access to specialists such as data scientists or ML-focused DevOps engineers. “There’s no boilerplate list of minimum skills,” he said. “Each organization must define its own baseline.”

From a governance standpoint, Planos recommends making AI policies part of the core acceptable use policy rather than an appendix. “Your AI acceptable use policy should be part of your existing acceptable use policy, not an afterthought,” he said.

Building Proactively, Not Reactively

AI is not a fleeting trend; it represents a major shift in the way organizations think, work, and compete. Companies that are investing in structured AI upskilling will be better positioned to maintain efficiency, security, and innovation in the coming years.

The challenge is not to resist AI tools but to embrace them with clarity, governance, and intent. By treating AI literacy as a universal skill, organizations can future-proof their workforce and harness the full potential of the technology without compromising safety or trust.

Planos sees this proactive mindset gaining momentum across industries: “Having that ethos from the start is really encouraging.”