Image credit: Pexels.com

Mental health care is one of the few sectors where technological disruption is both inevitable and deeply uncomfortable. The stakes are higher than efficiency. The cost of getting it wrong is human trust. As artificial intelligence pushes deeper into healthcare, mental health sits at the fault line, testing whether automation can coexist with empathy, judgment, and ethical restraint.

What is emerging is not a battle between humans and machines, but a sorting exercise. The most credible voices in the field are not asking whether AI belongs in mental health. They are asking where it must stop. The difference between support and substitution has never mattered more.

Across psychology, coaching, and AI infrastructure, a shared philosophy is taking shape: AI should absorb friction, not authority. It should reduce burnout, not redefine care. Used responsibly, it can protect clinicians from collapse and widen access without pretending to replace the human relationship at the core of healing.

AI as a Support Tool, Not a Therapist

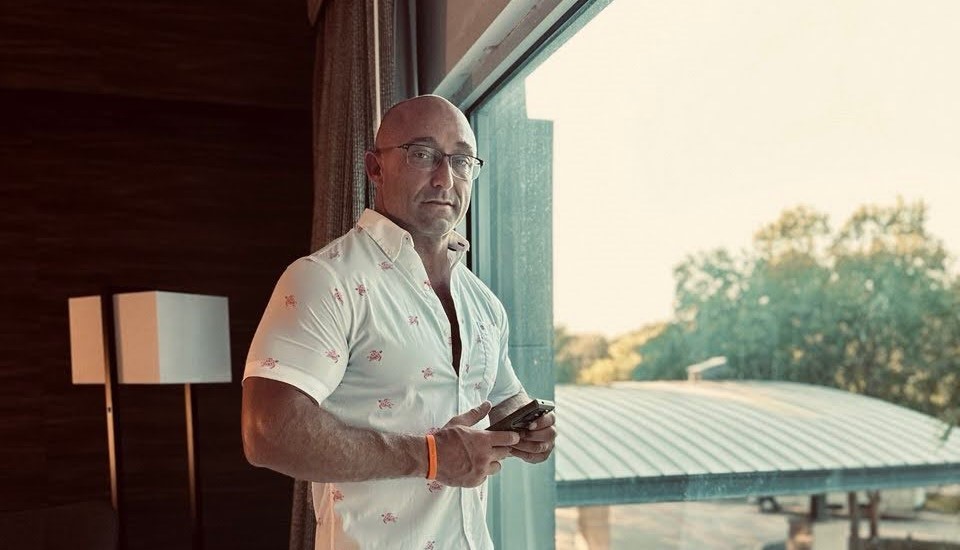

Damien Adler approaches AI from inside the system it aims to support. As a practicing psychologist and a health-tech founder, he has lived the administrative burden that quietly drains clinicians long before burnout becomes visible. His view is blunt and deliberately narrow: AI should draft, not decide. Anything more is a category error.

In practice, Adler argues, the biggest threat to mental health care today is not technological overreach but exhaustion. Clinicians are overwhelmed by documentation, compliance, and post-session admin that fracture their attention at the very moments when presence matters most. Handing that work to AI, he says, helps the session rather than intruding on it. “It actually enhances the clinical relationship by reducing the workload,” Adler says. “It allows clinicians to be fully present in the session instead of splitting attention between the client and the keyboard.”

That distinction is operational. AI systems at Zanda are designed to listen, summarize, and draft notes into clinician-defined templates after a session ends. What once took 15 or 20 minutes now takes under a minute. The result is not faster care, but clearer care. “Imagine having a conversation with a friend and they’re taking notes the whole time,” Adler says. “It changes the dynamic. That’s what clinicians have accepted for decades, and it doesn’t have to be that way anymore.”

Where Adler draws the line is decisive. AI cannot run a session, read body language, or decide when to challenge a client. “Therapy requires knowing when to push and when to sit back,” he says. “That comes from education, experience, and reading what isn’t said. AI can mimic rapport, but it doesn’t understand the human experience.” In mental health, agreeable is not the same as helpful.

That clarity extends to data and privacy. Adler insists that clinical content must never be used to train public models. Zanda’s AI runs in isolated environments with strict guardrails against hallucination or creative inference. “AI wants to be helpful,” he says. “So you have to be very clear about its role. It summarizes what happened. It does not invent what should have happened.” In mental health, restraint becomes the main feature, not the limitation.

Emotional Buffering and Sharper Focus

Daniel Hindi does not come from clinical care, but his argument lands squarely on mental health’s pressure points. Hindi is the CEO of the AI chatbot creation platform noem.AI, and his work in customer support automation exposed a quieter truth about burnout: it is not just workload that breaks people, but emotional volatility without recovery time.

“If AI can replace your job, it should,” Hindi says, then reframes the provocation. “AI is probably replacing the part of your job you hate.” What humans lose, he argues, is not purpose but repetition. What they regain is the ability to think clearly and respond with intention instead of fatigue.

Hindi describes AI as an emotional buffer, not because it removes feeling, but because it absorbs volatility. In customer-facing roles, AI fields the initial frustration, extracts the signal from the noise, and hands humans a clean problem to solve. “I don’t have to go through the emotional rollercoaster,” he says. “By the time it lands on my desk, I can actually help.”

That buffering has a downstream mental health effect. Burnout is not only physical exhaustion but cumulative emotional depletion. “If I’m emotionally drained all day, I start sounding apathetic,” Hindi says. “Not because I don’t care, but because I can’t keep riding that rollercoaster.” AI stabilizes the environment so people can show up as their best selves more consistently.

Counterintuitively, Hindi believes AI can restore humanity to work. When people are rushed, empathy is the first casualty. AI can draft thoughtful responses when humans lack bandwidth, inject clarity where tone might be misread, and remove cultural or language friction that compounds stress. “People say AI removes empathy,” he says. “What I see is AI making space for it.”

For mental health, the implication is indirect but powerful. Systems that reduce emotional overload upstream create conditions where care work, whether clinical or adjacent, becomes sustainable again. The goal is not to automate empathy, but to protect it.

The Risks of Direct-to-Consumer AI Tools

If there is a counterweight to AI optimism in mental health, Corey Turnbull provides it. A registered psychotherapist and the founder of the CanAI Institute, he is concerned not with theoretical misuse but with the everyday normalization of tools never designed to carry emotional authority.

Turnbull distinguishes sharply between professionals using AI within scope and clients using AI without context. “The average client will take it as an authoritative source,” he says. “And that’s problematic.” AI’s nonjudgmental tone feels therapeutic, but it lacks the capacity to challenge, redirect, or hold ethical responsibility.

The danger, Turnbull argues, is validation without friction. “Therapists don’t just listen. They challenge,” he says. “AI is famously not challenging.” In vulnerable users, constant affirmation can reinforce delusions, unhealthy behaviors, or dependency. Worse, people may begin preferring AI interactions over human ones, deepening isolation in a society already struggling with it.

Privacy is another fault line. Most public AI systems do not meet clinical confidentiality standards. “Whatever you type in can be used as training data,” Turnbull warns. “Clients don’t read the privacy policies, but as professionals, we know that matters.” In crisis situations, reliance on AI can be actively dangerous. A chatbot cannot dispatch local emergency resources or assess imminent risk.

Turnbull’s response is not prohibition but literacy. He is developing frameworks to help therapists evaluate how clients use AI and whether it supports or undermines treatment. “We need to ask how they’re using it and why,” he says. “And we need to educate them about risks and boundaries.” The ethical burden, in his view, cannot fall solely on users or founders. It must be shared.

Ultimately, Turnbull hopes AI never becomes central to emotional well-being. “Most of what makes therapy effective is the relationship,” he says. “That connection is one-sided with AI, even if it feels real.” In mental health, perception without reciprocity is not care.

Expanding Access Through AI-Powered Coaching

As a co-founder of CoupleWork, Tony Fabrikant did not set out to build a mental health product. Coming from fintech, he entered generative AI through experimentation, not ideology. It was only when he shared early voice prototypes with friends that something unexpected surfaced. “It understood the nuance of people’s personal situations,” he says. “It could act as an emotional translator.”

That insight collided with self-awareness. “I realized I had no business doing this alone,” Fabrikant says. Relationship care demanded clinical grounding. His call to Clay Cockrell, a psychotherapist with 25 years of experience, was less a pitch than a moral checkpoint. “I asked him if this was ethical, legal, safe,” Fabrikant says. “I wouldn’t do it without him.”

Cockrell’s motivation was shaped by a stark statistic. “90% of couples who need counseling don’t get it,” he says. Cost, access, and stigma block the door. For him, AI offered a chance to widen the entrance without pretending to replace therapy. “Relationships are the building block of humanity,” Cockrell says. “If you get that right, everything else can follow.”

Their product, an AI relationship coach named Maxine, is intentionally framed as coaching, not therapy. “Marriage counseling is very coachy,” Cockrell explains. “It’s about technique and exercises.” That distinction is reinforced by aggressive safety protocols. “From the beginning, it had to be about safety,” he says. References to self-harm, violence, or abuse trigger immediate intervention and redirection to human-staffed support.

Fabrikant describes the system as obsessed with guardrails. “We logged thousands of hours of testing,” he says. “Every change goes back through safety regression.” Maxine is rate-limited, non-addictive by design, and explicitly pro-human relationship. “Some AI companions optimize for time on app,” Fabrikant says. “We do the opposite.”

The most revealing insight, they note, is not how much users talk to AI, but why. Many feel freer disclosing sensitive issues without judgment. “There’s a freedom to talking to an AI,” Cockrell says. “It unlocks things people struggle to say out loud.” The goal is not to replace therapy, but to lower the threshold for seeking help, responsibly.

A Hybrid Path Forward

Mental health care does not need AI saviors. It needs systems that know their place. Across disciplines, the strongest voices agree on one principle: AI should handle the mundane so humans can do the meaningful.

Used with restraint, AI can reduce burnout, restore presence, and widen access without eroding trust. Used carelessly, it risks becoming an emotional authority without accountability. The difference is not technological capability, but ethical intent.

The future of mental health will not be automated. It will be augmented, selectively and cautiously. If AI succeeds here, it will not be because it learned to feel, but because it learned when not to speak.